Translation Application

The Translation example demonstrates the implementation of language translation using OPEA component-level microservices.

Architecture

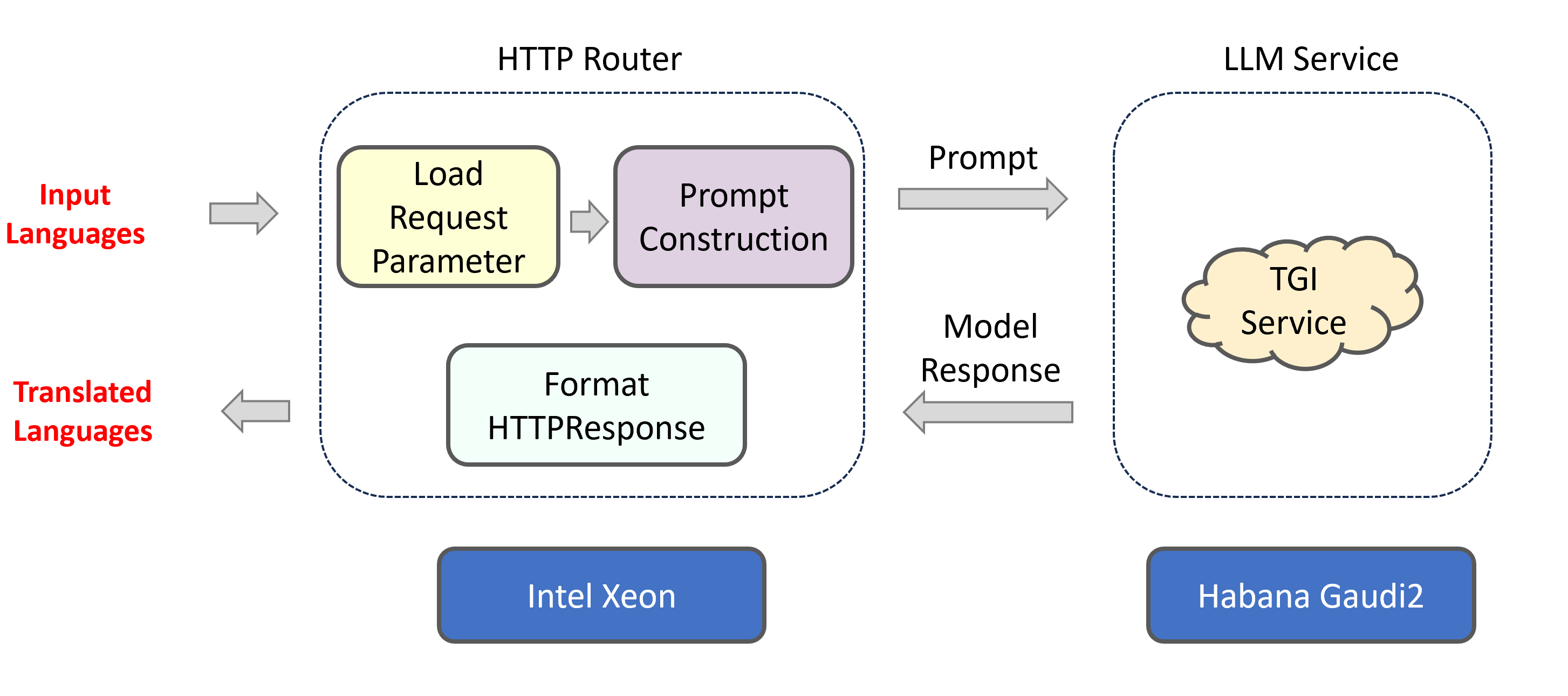

The architecture of the Translation Application is illustrated below:

The Translation example is implemented using the component-level microservices defined in GenAIComps. The flow chart below shows the information flow between different microservices for this example.

This Translation use case performs language translation inference across multiple platforms. Currently, we provide examples for Intel Gaudi2, Intel Xeon Scalable Processors, and AMD EPYC™ Processors, and we invite contributions from other hardware vendors to expand the OPEA ecosystem.
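To make the information flow more concrete, the sketch below shows how a client might send text to a deployed Translation service over HTTP. It is only an illustration under stated assumptions: the endpoint `http://localhost:8888/v1/translation` and the JSON field names `language_from`, `language_to`, and `source_language` are not given in this document, so check the deployment guide for your platform before relying on them. The helper names `build_translation_request` and `translate` are hypothetical.

```python
import json
import urllib.request

# Assumption: host, port, and path are illustrative and not taken from this
# document. Adjust them to match your own deployment.
DEFAULT_ENDPOINT = "http://localhost:8888/v1/translation"


def build_translation_request(text, language_from, language_to,
                              endpoint=DEFAULT_ENDPOINT):
    """Build (but do not send) a POST request for the translation service.

    The JSON field names below are assumptions about the request schema.
    """
    if not text.strip():
        raise ValueError("text to translate must not be empty")
    payload = {
        "language_from": language_from,
        "language_to": language_to,
        "source_language": text,
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def translate(text, language_from, language_to,
              endpoint=DEFAULT_ENDPOINT, timeout=120):
    """Send the request and return the raw response body as text."""
    request = build_translation_request(text, language_from, language_to, endpoint)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With a service running, a call such as `translate("Hello, world", "English", "German")` would return the raw response body. Building the request separately from sending it keeps the payload easy to inspect and test without a live deployment.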

Deployment Options

The table below lists the available deployment options and their implementation details for different hardware platforms.

| Platform | Deployment Method | Link |
|---|---|---|
| Intel Xeon | Docker Compose | |
| Intel Gaudi2 | Docker Compose | |
| AMD EPYC | Docker Compose | |
| AMD ROCm | Docker Compose | |

Validated Configurations

| Deploy Method | LLM Engine | LLM Model | Hardware |
|---|---|---|---|
| Docker Compose | vLLM, TGI | haoranxu/ALMA-13B | Intel Gaudi |
| Docker Compose | vLLM, TGI | haoranxu/ALMA-13B | Intel Xeon |
| Docker Compose | vLLM, TGI | haoranxu/ALMA-13B | AMD ROCm |
| Docker Compose | vLLM, TGI | haoranxu/ALMA-13B | AMD EPYC |
| Helm Charts | vLLM, TGI | haoranxu/ALMA-13B | Intel Gaudi |
| Helm Charts | vLLM, TGI | haoranxu/ALMA-13B | Intel Xeon |