ChatQnA Docker Image Build

Build MegaService Docker Image

The MegaService with Rerank is constructed from the GenAIExamples microservice pipeline defined in the chatqna.py Python script. Clone the repository, check out the v1.3 release, and build the MegaService Docker image with the commands below:

git clone https://github.com/opea-project/GenAIExamples.git

cd GenAIExamples

git fetch && git checkout v1.3

cd ChatQnA

docker build --no-cache -t opea/chatqna:latest --build-arg https_proxy=$https_proxy --build-arg http_proxy=$http_proxy -f Dockerfile .

Build Basic UI Docker Image

Build the frontend Docker image with the commands below, starting from the directory that contains the GenAIExamples clone:

cd GenAIExamples/ChatQnA/ui

docker build --no-cache -t opea/chatqna-ui:latest --build-arg https_proxy=$https_proxy --build-arg http_proxy=$http_proxy -f ./docker/Dockerfile .

Build Conversational React UI Docker Image (Optional)

Build a frontend Docker image that provides an interactive conversational UI for the ChatQnA MegaService.

Export the public IP address of your host machine to the host_ip environment variable.
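A minimal sketch for this step follows. It assumes that the source address of the host's default route is the address clients use to reach the server, which is the same `ip route` lookup this page uses for EXTERNAL_IP in the tracing section. On cloud VMs behind NAT, this lookup returns the private address, so in that case set host_ip to the public IP by hand.

```shell
# Assumption: the source address of the default route is the IP that clients
# use to reach this server. Replace it manually with the public IP otherwise.
host_ip=$(ip route get 8.8.8.8 2>/dev/null | grep -oP 'src \K[^ ]+')
export host_ip
echo "host_ip=${host_ip}"
```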

cd GenAIExamples/ChatQnA/ui

docker build --no-cache -t opea/chatqna-conversation-ui:latest --build-arg https_proxy=$https_proxy --build-arg http_proxy=$http_proxy -f ./docker/Dockerfile.react .
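After the builds finish, you can confirm that each tag exists in the local image store. The sketch below relies on `docker image inspect`. The helper name image_status is hypothetical. If you skipped the optional conversational UI build, expect its tag to be reported as missing.

```shell
# Sketch: report whether each image tag built above exists locally.
# Also prints "missing" if Docker itself is unavailable.
image_status() {
  if docker image inspect "$1" >/dev/null 2>&1; then
    echo "found"
  else
    echo "missing"
  fi
}

for image in opea/chatqna:latest opea/chatqna-ui:latest opea/chatqna-conversation-ui:latest; do
  echo "$(image_status "$image"): $image"
done
```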

Troubleshooting

If you get errors such as “Access Denied”, first validate each microservice individually. For example, send a test request to the embedding endpoint on port 6006:

http_proxy="" curl ${host_ip}:6006/embed -X POST -d '{"inputs":"What is Deep Learning?"}' -H 'Content-Type: application/json'
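To make this check easier to repeat, the same request can be wrapped in a small helper that prints only the HTTP status code. This is a sketch: check_embed is a hypothetical name. Like the example, it assumes the embedding endpoint is published on ${host_ip}:6006, and it falls back to localhost when host_ip is unset. A status of 200 means the service answered; 000 means nothing was reachable.

```shell
# Sketch: send the same test request and print only the HTTP status code.
# Assumption: the embedding endpoint is published on ${host_ip}:6006.
check_embed() {
  local url code
  url="http://${host_ip:-localhost}:6006/embed"
  code=$(http_proxy="" curl -s -o /dev/null -w '%{http_code}' --max-time 10 \
    -X POST "$url" -H 'Content-Type: application/json' \
    -d '{"inputs":"What is Deep Learning?"}')
  echo "${url} -> HTTP ${code}"
}

check_embed
```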

(Docker only) If all microservices work correctly, check port 8888 on ${host_ip}. Another user may already have allocated this port; if so, change the port mapping in compose.yaml.

(Docker only) If you get errors like “The container name is in use”, change the container name in compose.yaml.
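The two Docker-only checks above can be scripted as shown below. This is a sketch with hypothetical helper names. port_in_use depends on bash's /dev/tcp redirection and the coreutils timeout command, and container_exists depends on docker ps. Run the port check before you start the stack, because a running ChatQnA deployment will itself occupy port 8888.

```shell
# port_in_use HOST PORT: succeeds if a TCP connection to HOST:PORT is accepted,
# meaning something is already listening there. Gives up after 2 seconds.
port_in_use() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# container_exists NAME: succeeds if a container (running or stopped) is named exactly NAME.
container_exists() {
  docker ps -a --format '{{.Names}}' 2>/dev/null | grep -qx "$1"
}

if port_in_use "${host_ip:-127.0.0.1}" 8888; then
  echo "Port 8888 is already in use; change the port mapping in compose.yaml."
fi
```

For the container-name error, call container_exists with the container_name value from compose.yaml. If the call succeeds, either remove the stale container with docker rm or choose a different name.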

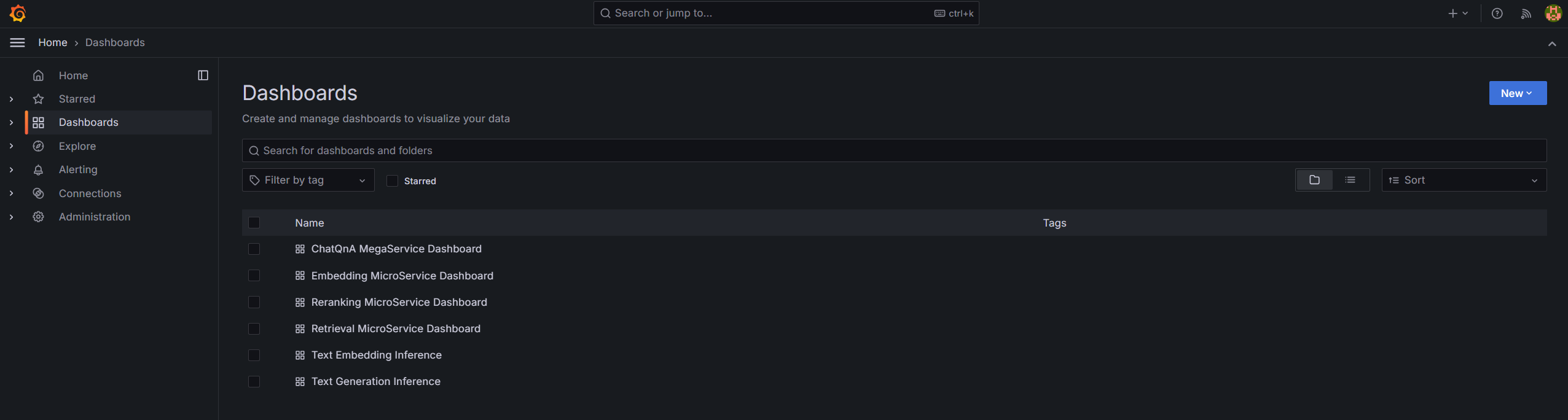

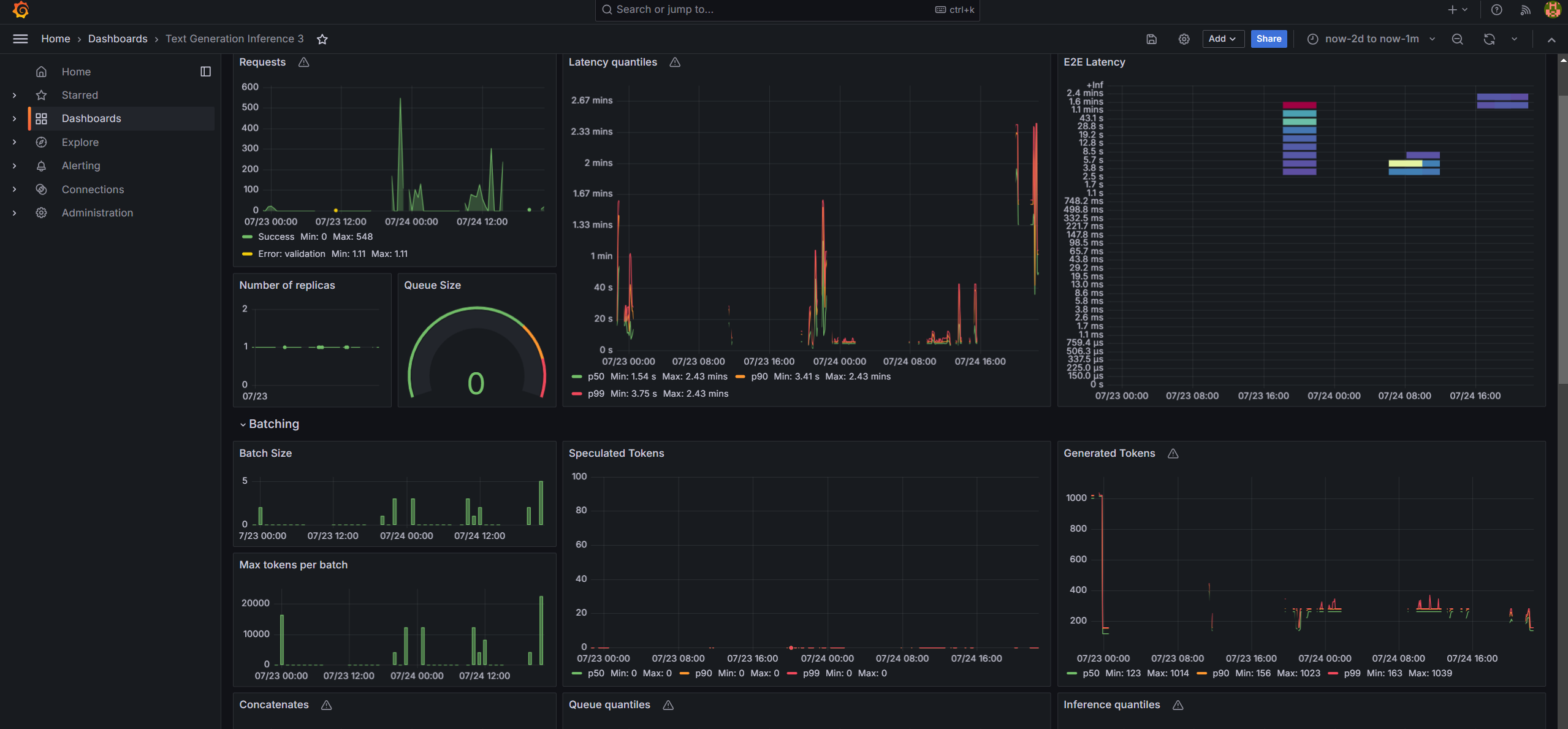

Monitoring OPEA Services with Prometheus and Grafana Dashboard

OPEA microservice deployments can be monitored through Grafana dashboards that display metrics collected by Prometheus. Follow the README to set up the Prometheus and Grafana servers and import the dashboards for the OPEA services.
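To confirm that Prometheus is actually scraping the OPEA services, you can evaluate its built-in up metric through the Prometheus HTTP query API (/api/v1/query). This sketch assumes Prometheus listens on its default port 9090 on ${host_ip}, so adjust the URL to match your README setup. The helper name prometheus_up is hypothetical.

```shell
# Sketch: evaluate the "up" metric through Prometheus' HTTP query API.
# Assumption: Prometheus listens on its default port 9090 on ${host_ip}.
prometheus_up() {
  local prom_url="http://${host_ip:-localhost}:9090"
  curl -sf --noproxy '*' --max-time 10 -G "${prom_url}/api/v1/query" \
    --data-urlencode 'query=up'
}
```

Calling prometheus_up prints the raw JSON response. Each scrape target appears with the value "1" if its last scrape succeeded and "0" if it failed.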

Tracing with OpenTelemetry and Jaeger

NOTE: This feature is disabled by default. Please use the compose.telemetry.yaml file to enable this feature.
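The following is a minimal sketch of enabling tracing. It assumes that compose.telemetry.yaml is meant to be layered on top of compose.yaml and that both files sit in the current directory. Docker Compose merges files passed with repeated -f flags, and later files extend or override earlier ones. The helper name start_with_telemetry is hypothetical.

```shell
# Hypothetical helper: start the stack with tracing enabled by merging the
# telemetry file into the base compose file. Run it from the directory that
# contains both compose.yaml and compose.telemetry.yaml.
start_with_telemetry() {
  for f in compose.yaml compose.telemetry.yaml; do
    if [ ! -f "$f" ]; then
      echo "missing $f in $(pwd)" >&2
      return 1
    fi
  done
  docker compose -f compose.yaml -f compose.telemetry.yaml up -d
}
```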

OPEA microservices and TGI/TEI serving can be traced in Jaeger dashboards using the OpenTelemetry tracing feature. Follow the README to trace additional functions if needed.

Tracing data is exported to Jaeger's OTLP/HTTP endpoint at http://{EXTERNAL_IP}:4318/v1/traces. You can get the external IP with the following command:

ip route get 8.8.8.8 | grep -oP 'src \K[^ ]+'

Access the Jaeger dashboard UI at http://{EXTERNAL_IP}:16686.
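The endpoints above can be derived in one place. This sketch reuses the ip route lookup unless EXTERNAL_IP is already set. It prints the OTLP/HTTP traces endpoint and the Jaeger UI URL, then probes the UI with curl. The probe bypasses any configured proxy, similar to the http_proxy="" prefix used in Troubleshooting.

```shell
# Sketch: reuse the ip route lookup unless EXTERNAL_IP is already set.
if [ -z "${EXTERNAL_IP:-}" ]; then
  EXTERNAL_IP=$(ip route get 8.8.8.8 2>/dev/null | grep -oP 'src \K[^ ]+')
fi
OTLP_TRACES_URL="http://${EXTERNAL_IP}:4318/v1/traces"
JAEGER_UI_URL="http://${EXTERNAL_IP}:16686"
echo "OTLP/HTTP traces endpoint: ${OTLP_TRACES_URL}"
echo "Jaeger UI:                 ${JAEGER_UI_URL}"

# 200 means the Jaeger UI answered; 000 means nothing was reachable.
ui_status=$(curl -s --noproxy '*' -o /dev/null -w '%{http_code}' --max-time 5 "$JAEGER_UI_URL")
echo "Jaeger UI HTTP status: ${ui_status}"
```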

For TGI serving on Gaudi, the Jaeger UI shows separate services such as opea, TEI, and TGI.
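To list the same services from the command line, you can query /api/services on the Jaeger query service. This is the HTTP endpoint its web UI calls, and it returns the service names as JSON. Jaeger treats this API as internal, so it may change between versions. The helper name list_jaeger_services is hypothetical, and it assumes the UI is reachable at ${EXTERNAL_IP}:16686 as described above.

```shell
# Hypothetical helper: print the services Jaeger has received traces for,
# for example to confirm that opea, TEI and TGI spans are arriving.
# Assumption: the Jaeger query service is reachable at ${EXTERNAL_IP}:16686.
list_jaeger_services() {
  curl -sf --noproxy '*' --max-time 5 "http://${EXTERNAL_IP:-localhost}:16686/api/services"
}
```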

Here is a screenshot of one trace of a TGI serving request.

There are also OPEA-related traces. To understand the time breakdown of each service request, look into each opea:schedule operation.

Some functions, such as llm/MicroService_asyn_generate, run asynchronously. Check the traces of such functions under a separate operation, such as opea:llm_generate_stream.